Core Insights

- Multimodal search in eCommerce allows customers to search using text, images, and voice, offering a more convenient shopping experience.

- Multimodal search technology is essential for eCommerce as it meets the growing demand for smarter, more intuitive product discovery.

- By analyzing context, multimodal search delivers highly personalized, relevant results, driving customer satisfaction and loyalty.

- Experro's Gen AI-powered platform provides multimodal search integration capabilities for faster and more intuitive eCommerce experiences.

Shoppers no longer have to rely on typed keywords alone to find what they want online. They can type a query, speak into a microphone for voice search, or upload a reference image.

This is the potential of a multimodal search bar. As eCommerce continues to evolve, customers demand smarter, more intuitive ways to find what they’re looking for.

Multimodal AI search optimization revolutionizes product discovery by blending various input methods for accurate and context-rich results.

Let’s explore the meaning of multimodal search, how it works, and a lot more in this blog.

Multimodal Search Meaning & Definition

Multimodal search definition: Multimodal search is an AI-powered search approach that combines multiple input types, such as text, voice, and images, to understand user intent more holistically and return more relevant, contextual results than traditional text-based search.

Unlike traditional keyword search, multimodal systems use AI and machine learning to interpret each input type and connect them in a shared understanding, so results are matched by meaning, not just exact terms.

For example, a shopper can upload a product photo and add text like “similar style, but in black and under $200”. The system returns visually similar items that match the extra constraints, improving search relevance and accuracy.
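The photo-plus-constraints query above can be sketched in a few lines. This is a minimal illustration, not any vendor's implementation: the catalog, the three-dimensional `embedding` vectors, and the `search` function are all hypothetical, and a real system would get its embeddings from an image model rather than hand-written numbers.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

# Hypothetical catalog with toy image embeddings (assumption for illustration).
CATALOG = [
    {"sku": "BAG-1", "color": "black", "price": 180, "embedding": [0.9, 0.1, 0.2]},
    {"sku": "BAG-2", "color": "black", "price": 260, "embedding": [0.9, 0.1, 0.1]},
    {"sku": "BAG-3", "color": "tan", "price": 150, "embedding": [0.8, 0.2, 0.2]},
    {"sku": "BAG-4", "color": "black", "price": 120, "embedding": [0.1, 0.9, 0.3]},
]

def search(query_embedding, color, max_price, catalog=CATALOG):
    """Apply the text constraints as filters, then rank by visual similarity."""
    candidates = [
        p for p in catalog
        if p["color"] == color and p["price"] <= max_price
    ]
    ranked = sorted(
        candidates,
        key=lambda p: cosine(query_embedding, p["embedding"]),
        reverse=True,
    )
    return [p["sku"] for p in ranked]

# "Similar style, but in black and under $200"
results = search([0.9, 0.1, 0.2], color="black", max_price=200)
print(results)
```

Here the text constraints act as hard filters and the image drives the ordering, so an over-budget item never appears even when it looks nearly identical to the photo.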

What Are the Examples of a Multimodal AI Search?

Multimodal AI search enhances product discovery by combining different input types for more accurate results.

The following multimodal search examples show how integrating text, image, and voice can streamline the shopping experience.

1. Searching for a Fashion Item Using Text and Image

A user looking for a specific dress can upload a reference image while describing details like "red floral maxi dress with puff sleeves".

The search engine processes both inputs, matching the visual style with the textual description to deliver highly relevant results.

This enhances accuracy, especially when product variations exist in different colors, patterns, or styles.

2. Finding a Jewelry Piece with Voice and Image

A customer searching for an engagement ring can upload a picture of a design they like while saying, "Show me similar rings with a rose gold band".

The system analyzes both inputs, considering design elements, metal type, and gemstone shape to refine the results.

This makes it easier for shoppers to find jewelry that matches their preferences without needing multiple searches.


3. Discovering a Beauty Product Using Multimodal Search Queries

A shopper wants to find a lipstick shade they saw online. They upload a screenshot and add text like "matte finish, warm undertone, under $25".

The search engine matches the visual color and finish cues with product attributes like undertone, texture, and price to surface the closest shade matches.

This improves discovery when shoppers can’t describe a shade precisely or when brands use different naming conventions.

4. Finding a Furniture Item Using Image and Text

A customer is looking for a sofa similar to one they saw in a photo. They upload the image and add text like "3-seater, beige, modern style, stain-resistant fabric".

The system identifies visual features like shape, material texture, and color, then combines them with the shopper’s requirements to return visually similar options that meet size and fabric needs.

This helps shoppers move faster from inspiration to purchase in categories where visual match matters more than keywords.

5. B2B Product Search Using Image and Text (Parts or Components)

A procurement manager needs to reorder a component but only has a photo and a partial identifier. They upload the image and type "compatible with Model X, stainless steel, 40mm".

The search engine uses the image to identify the part type and combines it with technical attributes, compatibility signals, and spec filters to return the right SKU and approved alternatives.

This reduces back-and-forth and speeds up ordering when product names or part numbers are inconsistent across catalogs.

These were a few examples of multimodal search. Let us walk you through the working of the multimodal search algorithm in the next section.

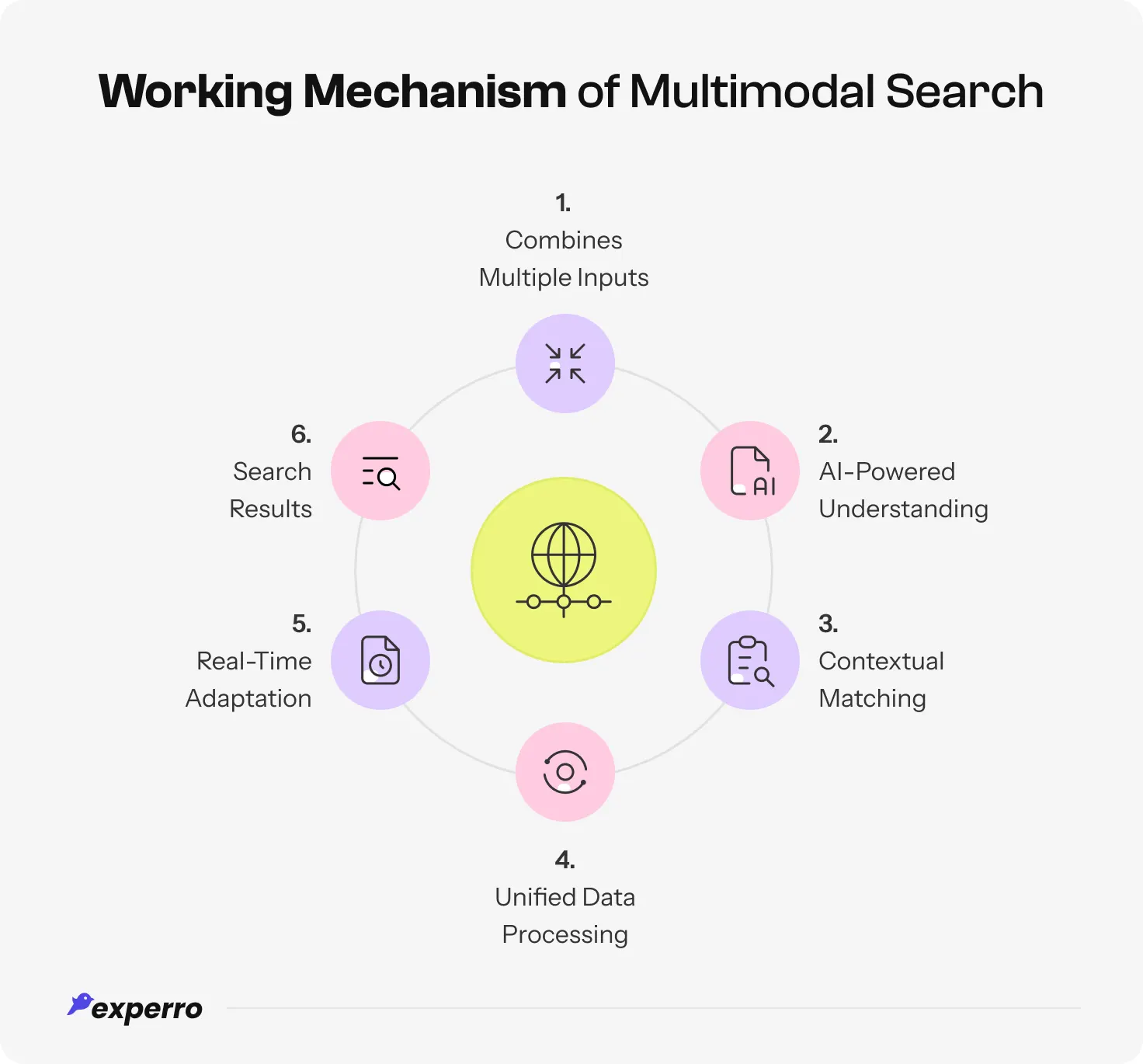

How Does Multimodal Search Work?

eCommerce multimodal search integrates advanced technologies to process and interpret diverse inputs logically.

Here’s how it works:

1. Integrating Multiple Inputs

Multimodal vector search processes various inputs like text, images, and voice simultaneously. For instance, a customer can describe a product verbally while uploading a reference image to refine the eCommerce search.

This synergy of inputs, with the help of fuzzy search, helps create a more accurate understanding of the query.

By leveraging multiple input methods like text, images, and voice, it adapts to different user preferences, ensuring both flexibility in search methods and precision in results.
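One simple way to combine inputs is to turn each one into a vector and blend the vectors into a single query. The sketch below assumes the text and image vectors already live in the same embedding space (as produced by a model trained on both), and the `fuse` function and its weights are hypothetical names chosen for illustration.

```python
import math

def normalize(vec):
    """Scale a vector to unit length (returns a copy if it is all zeros)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else list(vec)

def fuse(embeddings, weights):
    """Weighted average of unit-normalized modality vectors, renormalized."""
    dim = len(next(iter(embeddings.values())))
    fused = [0.0] * dim
    for name, vec in embeddings.items():
        weight = weights.get(name, 0.0)
        for i, x in enumerate(normalize(vec)):
            fused[i] += weight * x
    return normalize(fused)

# Toy two-dimensional vectors standing in for real model output.
query = fuse(
    {"text": [1.0, 0.0], "image": [0.0, 2.0]},
    {"text": 0.5, "image": 0.5},
)
print(query)
```

Normalizing each input first keeps a long vector from dominating the blend, and the weights let a store decide how much the photo should count relative to the typed or spoken description.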

2. Processing with Generative AI-Powered Understanding

Artificial Intelligence (AI) plays a pivotal role in interpreting inputs. It uses natural language search (NLS), image modalities, and voice recognition to understand the user’s intent.

Generative multimodal AI systems ensure that even vague or complex queries are handled efficiently. Furthermore, ML models continuously enhance the system’s ability to decode inputs over time.

This makes the search experience smarter and more intuitive, reducing the likelihood of irrelevant results.

3. Refining Results Through Contextual Matching

The system analyzes the context behind each input query to deliver neural search results. For example, it considers user preferences, past searches, and the relationships between the inputs to refine results further.

Multimodal semantic search ensures that the system doesn’t treat inputs in isolation, leading to more personalized results.

This capability is particularly valuable in eCommerce, where user context can significantly impact product recommendations and conversions.
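As a rough illustration of contextual matching, the snippet below nudges results toward categories the shopper has searched before. The `rerank` function, the `boost` value, and the data shapes are assumptions made for this example; production systems typically draw on many more signals.

```python
from collections import Counter

def rerank(results, past_categories, boost=0.2):
    """Add a small bonus to items in categories the shopper searched before."""
    counts = Counter(past_categories)
    total = sum(counts.values())

    def score(item):
        affinity = counts[item["category"]] / total if total else 0.0
        return item["relevance"] + boost * affinity

    return sorted(results, key=score, reverse=True)

results = [
    {"sku": "A", "category": "sneakers", "relevance": 0.80},
    {"sku": "B", "category": "boots", "relevance": 0.78},
]
history = ["boots", "boots", "boots", "sneakers"]

ranked = [item["sku"] for item in rerank(results, history)]
print(ranked)
```

With no history the original relevance order is preserved, so new visitors still get sensible results.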

4. Unified Data Processing

A multimodal product search engine operates on a unified platform that combines different data modalities. This eliminates silos and ensures that relevant information is processed holistically, enhancing accuracy and speed.

The unified data processing approach also simplifies backend operations, making it easier for businesses to manage and analyze large volumes of data.

This comprehensive processing ensures consistency in delivering results, no matter how complex or varied the inputs are.

5. Real-Time Adaptation

The system adapts to real-time inputs, dynamically updating search results as new information is added.

This ensures a fluid and interactive search experience for users. Real-time adaptation allows customers to refine their searches on the go, providing instant feedback and narrowing down options.

The agility in response makes the search journey more engaging and efficient, encouraging users to interact more with the platform.
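The refine-as-you-go behavior can be pictured as a session that remembers earlier constraints and re-filters each time a new one arrives. This is only a sketch: `SearchSession` and its methods are hypothetical, and the catalog is a toy list rather than a live index.

```python
class SearchSession:
    """Keeps the shopper's constraints and re-filters whenever one is added."""

    def __init__(self, catalog):
        self.catalog = catalog
        self.filters = {}

    def refine(self, **constraints):
        """Merge new constraints into the session and return updated results."""
        self.filters.update(constraints)
        return self.results()

    def results(self):
        return [
            product["sku"]
            for product in self.catalog
            if all(product.get(key) == value for key, value in self.filters.items())
        ]

catalog = [
    {"sku": "J1", "color": "black", "size": "M"},
    {"sku": "J2", "color": "black", "size": "L"},
    {"sku": "J3", "color": "blue", "size": "M"},
]

session = SearchSession(catalog)
first = session.refine(color="black")   # shopper says "black jackets"
second = session.refine(size="M")       # then adds "in medium"
print(first, second)
```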

6. Display Precise Search Results

Multimodal RAG delivers highly relevant results by combining AI-driven ranking, contextual analysis, and user intent recognition.

It intelligently filters and organizes results based on multiple input types, ensuring accuracy. Visual, textual, and voice-based queries are processed together to refine search outputs dynamically.

This precision in personalized search helps users find the most relevant products faster, improving engagement and conversions.
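When each input type produces its own ranked list, the lists have to be merged into one. Reciprocal rank fusion is one widely used technique for this; it is shown here as an example of the idea, not as the method any particular platform uses. The function name, the constant `k=60`, and the sample SKUs are illustrative assumptions.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Merge ranked lists: each item scores 1 / (k + rank) per list it appears in."""
    scores = {}
    for ranking in rankings:
        for rank, sku in enumerate(ranking, start=1):
            scores[sku] = scores.get(sku, 0.0) + 1.0 / (k + rank)
    # Highest score first; ties broken alphabetically for a stable order.
    return sorted(scores, key=lambda sku: (-scores[sku], sku))

text_hits = ["A", "B", "C"]    # results from the typed or spoken query
image_hits = ["B", "D", "A"]   # results from the uploaded photo

fused = reciprocal_rank_fusion([text_hits, image_hits])
print(fused)
```

Items that rank well in both lists rise to the top, while items found by only one input still appear lower down instead of being dropped.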

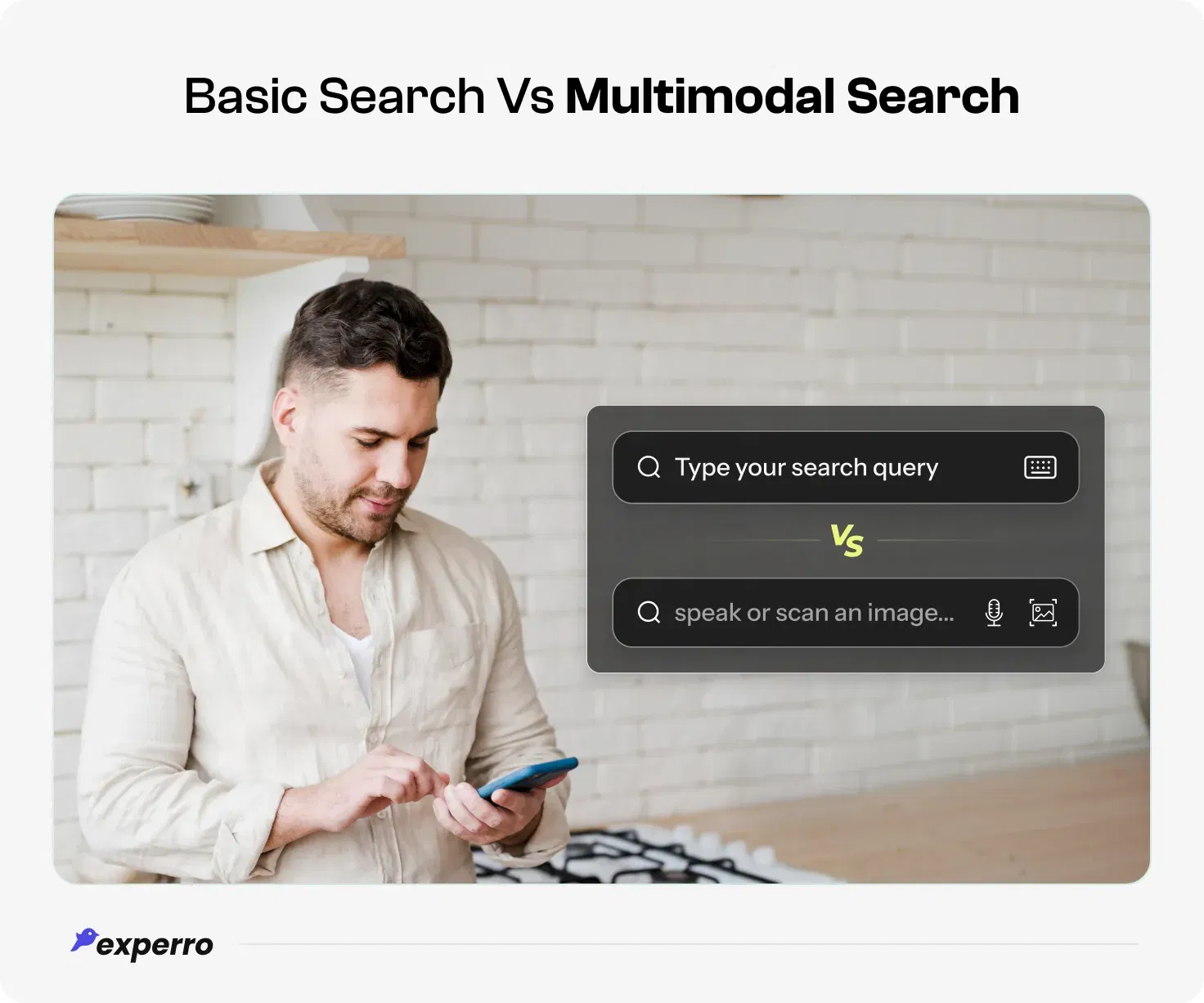

How Does Multimodal Search Differ from Basic Search Functionality?

Unlike basic search, which typically relies on a single input type (only text), multimodal AI search processes multiple inputs simultaneously for precise results.

It is more intuitive and context-aware, delivering results that align closely with user intent.

Below is a table highlighting the differences between basic text-based search and multimodal search:

| Feature | Basic Search | Multimodal Search |
|---|---|---|
| Input Type | Single input (usually text) | Multiple inputs (text, image, voice, etc.) |
| Context Awareness | Limited context, based on keywords | Context-aware, processes multiple factors |
| Result Accuracy | Matches based on keywords only | Matches based on richer data inputs |
| User Experience | Static, one-dimensional | Dynamic, personalized & interactive |
| Flexibility | Limited to text queries only | Supports diverse search methods (text, image, voice) |

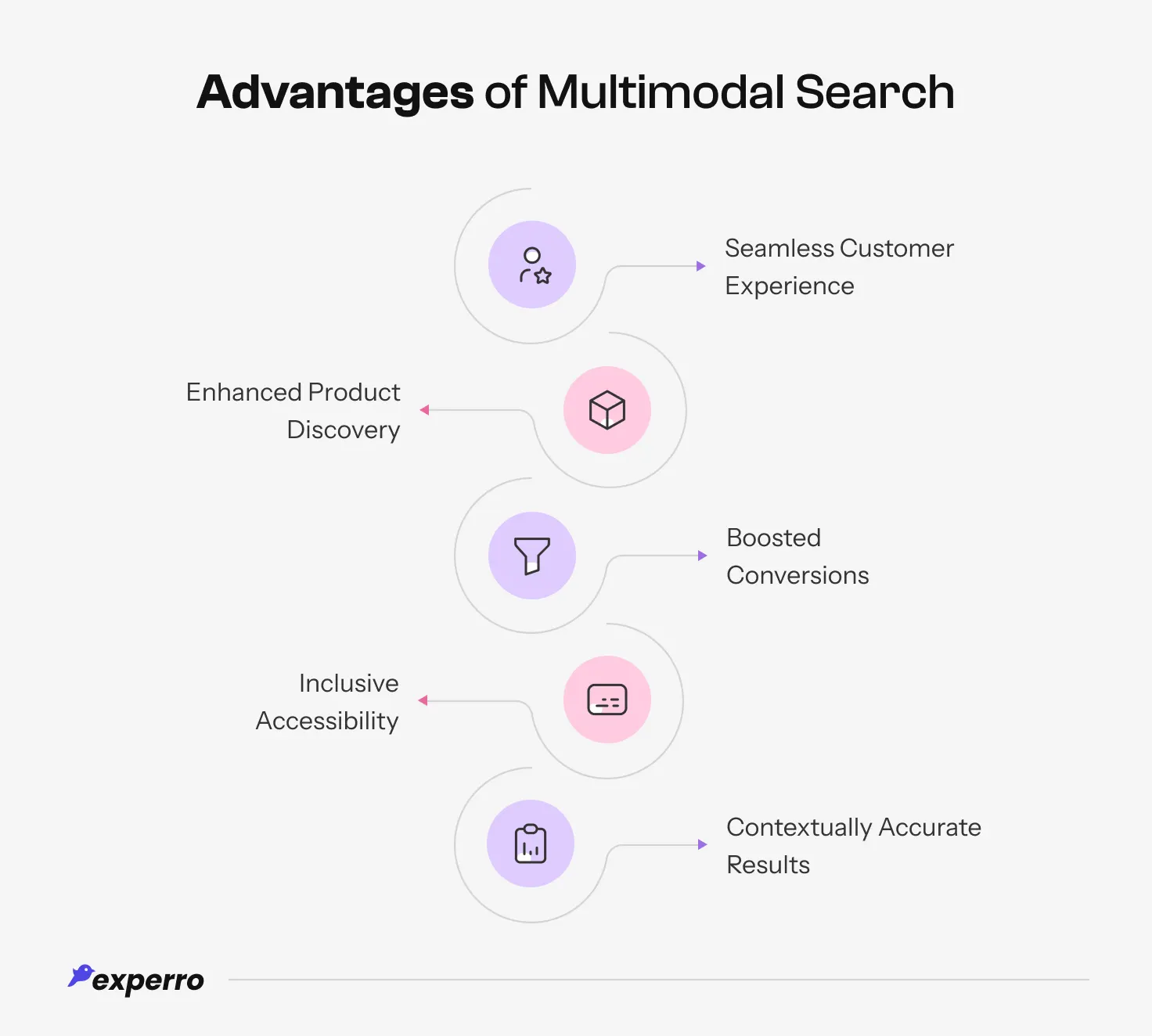

What Are the Benefits of Multimodal Search in eCommerce?

Leveraging large language models for multimodal search brings transformative benefits to eCommerce platforms, creating value for both businesses and customers.

Let's explore the advantages in detail:

1. Seamless Customer Experience

By combining different modalities and input methods, multimodal AI search caters to varying customer preferences.

Users can search in the way they find most convenient, leading to a smoother, frustration-free experience. It reduces the effort required to find products, enhancing overall user satisfaction.

Whether it’s typing, speaking, or uploading an image, customers can find and discover products throughout their search journey. This convenience fosters loyalty and repeat visits.

2. Enhanced Product Discovery

Customers can find products more easily with context-rich searches. For instance, pairing an uploaded image with more inputs narrows down the options significantly, making multimodal product discovery faster.

Building multimodal search and RAG (Retrieval-Augmented Generation) bridges the gap between customers' vague ideas and relevant products.

It allows retailers to surface hard-to-find or niche items, ensuring no product goes unnoticed.

3. Boosted Conversions

Customers are more likely to purchase when they find what they’re looking for quickly and accurately.

eCommerce multimodal search eliminates barriers to conversion by improving relevance and usability. By reducing the time to decision, it minimizes cart abandonment and boosts impulse purchases.

Additionally, personalized results encourage customers to explore complementary products, increasing average order value.

4. Inclusive Accessibility

This search technology supports diverse user needs. For instance, visually impaired users can rely on voice search, while others might use multimodal image search to bypass language barriers.

Mobile search with multimodal queries further supports users in multilingual or global markets, where language and mobile devices can otherwise be a barrier to entry.

By catering to everyone, businesses expand their customer base effortlessly.

5. Contextually Accurate Results

By analyzing multiple inputs and their relationships, multimodal RAG ensures results are tailored to the user’s specific intent, leading to higher satisfaction.

It reduces irrelevant results, saving customers time and effort. The LLM in eCommerce learns from user behavior to refine accuracy, providing ever-improving experiences.

This depth of understanding fosters trust in the search process, as customers feel understood and valued.

Curious How Multimodal Search Works on Your Store?

See how Gen AI search brings together text, images, and voice to understand shopper intent and deliver more relevant results.

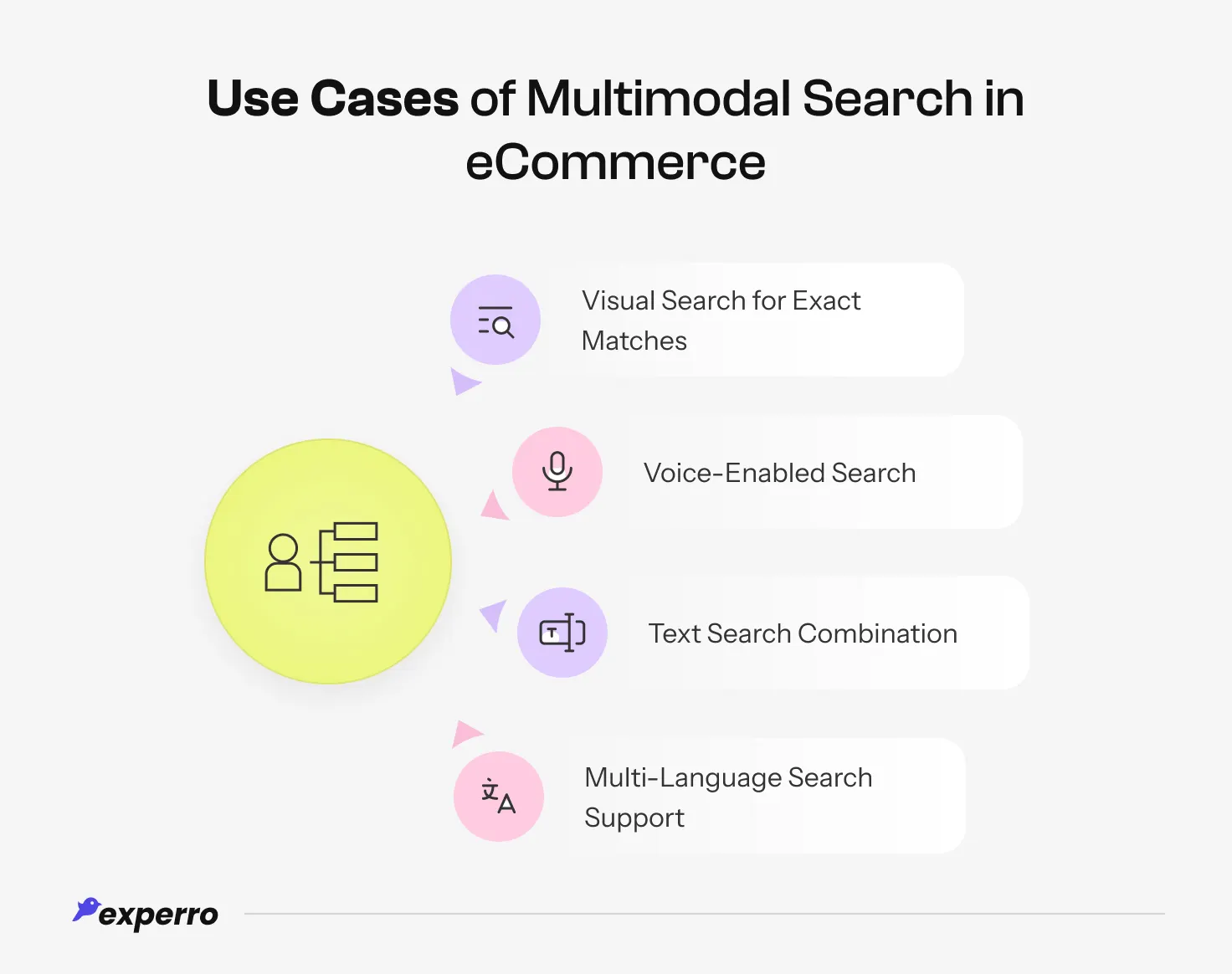

How Do Leading Retailers Use eCommerce Multimodal Search? Key Use Cases Explained!

Here are some multimodal search use cases:

1. Visual Search for Exact Matches

Customers can upload images to find products with similar features. This is particularly useful for fashion and home decor, where visual details matter significantly.

For example, shoppers can upload photos of a dress they saw online or a wooden table from a furniture eCommerce website that matches their home’s aesthetic.

Visual search reduces the need for customers to describe products manually and accelerates the discovery process.

2. Voice-Enabled Search

Voice search allows users to describe what they’re looking for verbally. This is ideal for on-the-go shoppers or those who prefer hands-free interaction.

It is particularly helpful for complex queries, like “Find me a black jacket under $100 available in medium size”.

Voice-enabled searches also make eCommerce more inclusive for users with physical limitations.

3. Text Search Combination

Pairing text with image search or other inputs—like a voice command—provides more detailed and accurate results.

For example, a shopper can search for “red sneakers” while uploading a photo of a specific design. This combination creates a richer context, helping shoppers find exact matches faster.

It’s especially useful for niche items or when customers aren’t sure how to describe their desired product.

4. Multi-Language Search Support

By supporting searches in multiple languages, this technology ensures accessibility for global audiences, breaking down language barriers.

It allows retailers to cater to diverse markets, enhancing their international reach. Whether typing, speaking, or uploading images, users can search confidently in their preferred language, improving conversion rates and inclusivity.

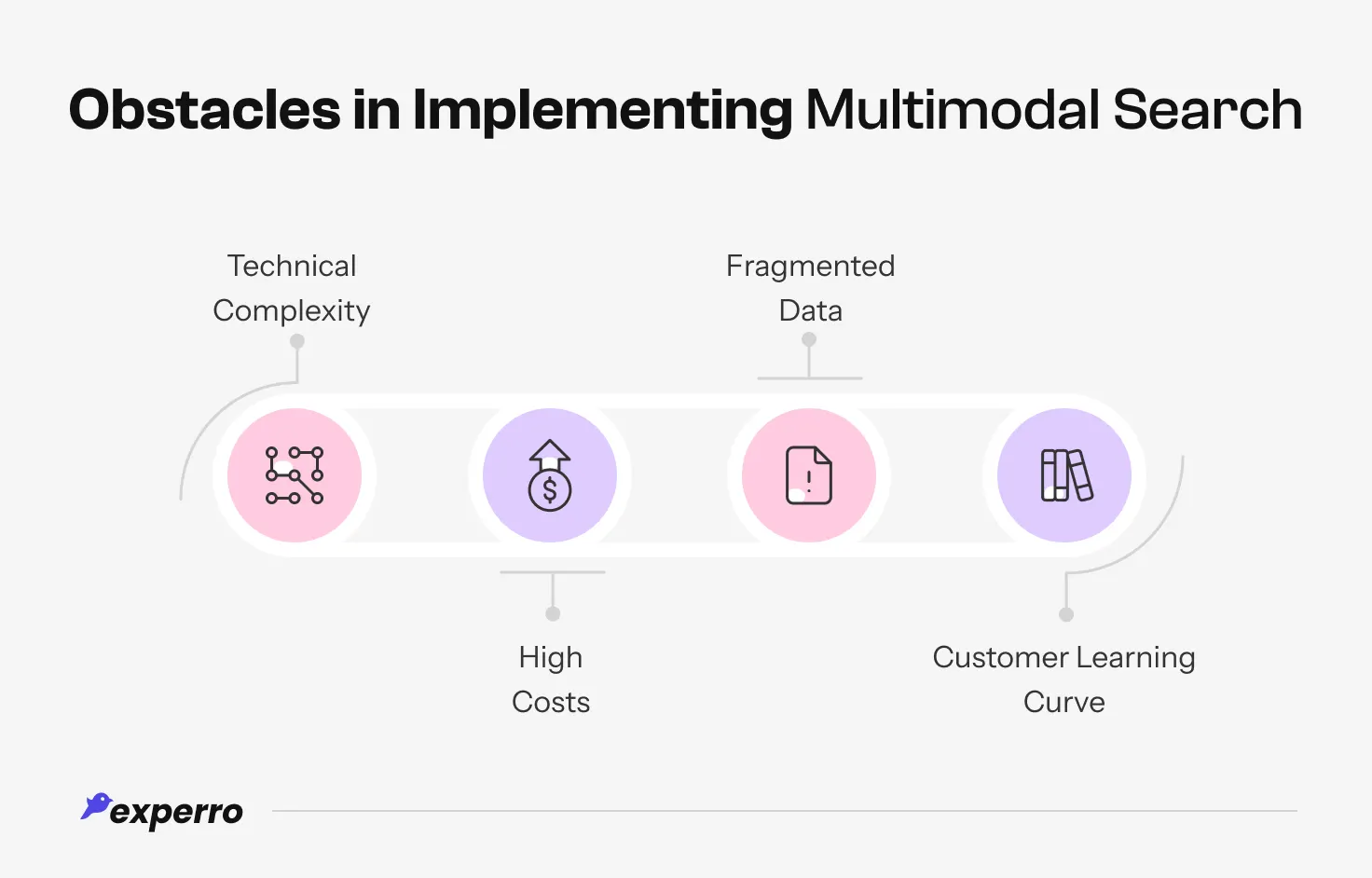

What Are the Key Challenges You Might Face in Adopting Multimodal Search? (And Their Solutions!)

Adopting multimodal eCommerce search comes with its own set of challenges.

Let’s look at the challenges of implementing multimodal search on your platform, along with ways to address them.

1. Technical Complexity

Integrating multiple technologies like NLP, image recognition, and voice processing requires significant technical expertise and resources. The complexity increases with the need to ensure smooth interoperability across these systems.

However, Experro reduces this technical burden by bringing AI-powered search and conversational technologies together, enabling businesses to deliver seamless conversational experiences without heavy engineering effort.

2. High Costs

The infrastructure and development costs of implementing advanced search can be a barrier, especially for smaller businesses.

In this case, Experro offers a scalable solution that helps businesses access multimodal AI search without the high upfront costs, making it affordable for both large and small businesses.

3. Fragmented Data

Ensuring data from various sources is unified and clean for processing can be difficult, particularly for businesses with legacy (old) systems. Poor data management can affect the accuracy of search results.

To address this, Experro’s AI-powered platform unifies fragmented data and ensures smooth integration, providing accurate, context-aware search results while minimizing zero search results.

4. Customer Learning Curve

Introducing new search methods may require educating customers, as they might initially struggle to use the feature effectively. Customers may resist new multimodal search interfaces or technologies if they’re not familiar with them.

To help with this, Experro provides intuitive, user-friendly search interfaces that ensure smooth adoption, allowing customers to easily transition to and engage with multimodal search.

The global multimodal AI market is projected to reach $98.9 billion by 2037.

Transform Your e-store with Experro’s Next-Gen Multimodal Search Engine!

Experro’s agentic experience platform is built to streamline and elevate the multimodal search experience of your e-store.

With Gen AI-powered search at its core, the platform builds in advanced technologies like image recognition and real-time data processing to create a seamless and dynamic shopping journey.

By combining these capabilities, Experro ensures that your customers benefit from fast, accurate, and hyper-personalized search results, transforming your e-store into a more intuitive, customer-focused platform.

This ongoing innovation helps keep your business at the forefront of the eCommerce experience.

See the Impact of Multimodal Search on Your Business

Adopt Experro & provide your customers with a high-performance multimodal search experience!

Conclusion

Multimodal search is reshaping how customers interact with eCommerce platforms, offering unmatched accuracy and ease.

By adopting it, businesses can stay ahead of the competition and deliver the seamless experiences today’s customers demand.

With Experro’s support, integrating eCommerce multimodal AI search is simple and effective. Book a call with us to learn how we can help elevate your eCommerce platform with cutting-edge multimodal search capabilities.

FAQs

How do multimodal search queries improve eCommerce experiences?

Multimodal search reduces friction in product discovery, leading to faster and more relevant results.

By analyzing multiple input types, it understands user intent better, even when keywords are unclear.

It also enhances accessibility, enabling diverse users—including those with certain disabilities—to navigate and shop effortlessly.

Can small businesses implement multimodal search?

Yes, with the right tools and platforms like Experro, even small businesses can adopt multimodal search algorithms effectively.

How is Experro DXP unique in offering multimodal search?

Experro's multimodal search combines AI-driven insights, unified data, and scalability to deliver a seamless multimodal search experience.

With real-time indexing and intelligent ranking, it delivers highly relevant results instantly. Experro’s AI-powered personalization further refines search outcomes based on user behavior and different preferences.

What is the future of multimodal search in eCommerce?

The future lies in more intuitive, accessible, and personalized search experiences, with multimodal AI search becoming a standard feature for online stores.

What is a multimodal search?

Multimodal search is a search approach that understands and retrieves results from multiple input types, such as text, images, and voice. In eCommerce, a multimodal search engine can interpret a typed query, a spoken request, or an uploaded product image and still return relevant products based on meaning, not just keywords.

Many modern systems use a multimodal LLM to connect these inputs into one shared understanding, which helps the multimodal search system deliver more accurate results even when shoppers cannot describe what they want perfectly.

How do we optimize for multimodal AI search experiences?

To optimize for multimodal AI search experiences, focus on the foundations that improve understanding across formats and keep relevance consistent.

- Strengthen product data: Maintain clean titles, descriptions, attributes, and structured metadata so the multimodal search system can interpret intent reliably.

- Improve image quality: Use consistent angles, lighting, and backgrounds, and add descriptive alt text to support stronger visual matching.

- Enable intent-based relevance tuning: Prioritize the right signals, such as category context, availability, price ranges, and shopper behavior, to sharpen multimodal search capabilities across text, voice, and image journeys.

- Test each modality separately: Track performance for text, voice, and image search so you can identify where the multimodal search engine needs better data, ranking, or refinements.

- Monitor and iterate: Use search analytics like zero-results rate, refinement rate, and search-to-PDP time to continuously improve relevance and usability.
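To make the per-modality tracking concrete, here is a small sketch that computes the zero-results rate for each input type from a search log. The event format (`modality` and `result_count` fields) and the function name are assumptions for illustration; your analytics tool will have its own schema.

```python
from collections import defaultdict

def zero_results_rate_by_modality(events):
    """Share of searches that returned nothing, split by input type."""
    totals = defaultdict(int)
    zeros = defaultdict(int)
    for event in events:
        totals[event["modality"]] += 1
        if event["result_count"] == 0:
            zeros[event["modality"]] += 1
    return {modality: zeros[modality] / totals[modality] for modality in totals}

# Hypothetical search log entries.
events = [
    {"modality": "text", "result_count": 12},
    {"modality": "text", "result_count": 0},
    {"modality": "image", "result_count": 8},
    {"modality": "voice", "result_count": 0},
]

rates = zero_results_rate_by_modality(events)
print(rates)
```

A modality with a noticeably higher rate than the others is a good place to start looking for gaps in data or ranking.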

What are some popular use cases for multimodal AI solutions?

Popular multimodal AI solutions focus on faster discovery, better relevance, and fewer dead ends across high-consideration categories.

- Visual product discovery: Shoppers upload an image to find similar products, such as furniture, fashion, or decor.

- Voice plus filters: Customers speak a query like “black running shoes under $100” and refine results instantly with attributes.

- Cross-modal refinement: Start with an image search, then narrow with text like “same style, but leather” for higher precision.

- Customer support and guided selling: A multimodal LLM can interpret product photos, questions, and context to recommend the right items or troubleshooting steps.

- B2B parts search: Buyers upload a component image and add specs or compatibility text to locate the correct SKU in a large catalog.

What are the three types of multimodal input?

In most commerce and search contexts, the three common types of multimodal inputs are:

- Text, such as typed queries, product names, or attribute keywords

- Images, such as uploaded photos, screenshots, or scanned labels

- Voice, such as spoken queries or conversational requests

A strong multimodal search system combines these inputs into one retrieval and ranking flow, so the experience feels consistent regardless of how a shopper searches.

Pallavi Dadhich

Content Writer @ Experro

Pallavi is an ambitious author recognized for her expertise in crafting compelling content across various domains. Beyond her professional pursuits, Pallavi is deeply passionate about continuous learning, often immersing herself in the latest industry trends. When not weaving words, she dedicates her time to mastering graphic design.

